Nota

Haga clic en aquí para descargar el código completo del ejemplo o para ejecutar este ejemplo en su navegador a través de Binder

Ejemplo de reconocimiento de rostros mediante eigenfaces y SVM¶

El conjunto de datos utilizado en este ejemplo es un extracto preprocesado de «Labeled Faces in the Wild», también conocido como LFW:

Resultados esperados para las 5 personas más representadas en el conjunto de datos:

Ariel Sharon |

0.67 |

0.92 |

0.77 |

13 |

Colin Powell |

0.75 |

0.78 |

0.76 |

60 |

Donald Rumsfeld |

0.78 |

0.67 |

0.72 |

27 |

George W Bush |

0.86 |

0.86 |

0.86 |

146 |

Gerhard Schroeder |

0.76 |

0.76 |

0.76 |

25 |

Hugo Chavez |

0.67 |

0.67 |

0.67 |

15 |

Tony Blair |

0.81 |

0.69 |

0.75 |

36 |

avg / total |

0.80 |

0.80 |

0.80 |

322 |

Out:

Total dataset size:

n_samples: 1288

n_features: 1850

n_classes: 7

Extracting the top 150 eigenfaces from 966 faces

done in 0.857s

Projecting the input data on the eigenfaces orthonormal basis

done in 0.015s

Fitting the classifier to the training set

done in 23.195s

Best estimator found by grid search:

SVC(C=1000.0, class_weight='balanced', gamma=0.005)

Predicting people's names on the test set

done in 0.084s

precision recall f1-score support

Ariel Sharon 0.75 0.46 0.57 13

Colin Powell 0.81 0.87 0.84 60

Donald Rumsfeld 0.86 0.67 0.75 27

George W Bush 0.85 0.98 0.91 146

Gerhard Schroeder 0.95 0.80 0.87 25

Hugo Chavez 1.00 0.60 0.75 15

Tony Blair 0.97 0.81 0.88 36

accuracy 0.86 322

macro avg 0.88 0.74 0.80 322

weighted avg 0.87 0.86 0.85 322

[[ 6 2 0 5 0 0 0]

[ 1 52 1 6 0 0 0]

[ 1 2 18 6 0 0 0]

[ 0 3 0 143 0 0 0]

[ 0 1 0 3 20 0 1]

[ 0 3 0 2 1 9 0]

[ 0 1 2 4 0 0 29]]

from time import time

import logging

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.model_selection import GridSearchCV

from sklearn.datasets import fetch_lfw_people

from sklearn.metrics import classification_report

from sklearn.metrics import confusion_matrix

from sklearn.decomposition import PCA

from sklearn.svm import SVC

print(__doc__)

# Display progress logs on stdout

logging.basicConfig(level=logging.INFO, format='%(asctime)s %(message)s')

# #############################################################################

# Download the data, if not already on disk and load it as numpy arrays

lfw_people = fetch_lfw_people(min_faces_per_person=70, resize=0.4)

# introspect the images arrays to find the shapes (for plotting)

n_samples, h, w = lfw_people.images.shape

# for machine learning we use the 2 data directly (as relative pixel

# positions info is ignored by this model)

X = lfw_people.data

n_features = X.shape[1]

# the label to predict is the id of the person

y = lfw_people.target

target_names = lfw_people.target_names

n_classes = target_names.shape[0]

print("Total dataset size:")

print("n_samples: %d" % n_samples)

print("n_features: %d" % n_features)

print("n_classes: %d" % n_classes)

# #############################################################################

# Split into a training set and a test set using a stratified k fold

# split into a training and testing set

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.25, random_state=42)

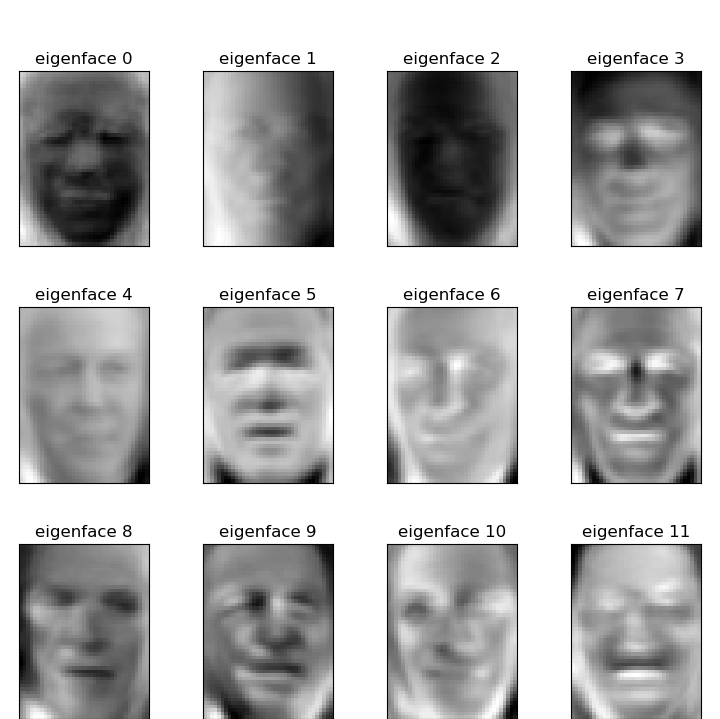

# #############################################################################

# Compute a PCA (eigenfaces) on the face dataset (treated as unlabeled

# dataset): unsupervised feature extraction / dimensionality reduction

n_components = 150

print("Extracting the top %d eigenfaces from %d faces"

% (n_components, X_train.shape[0]))

t0 = time()

pca = PCA(n_components=n_components, svd_solver='randomized',

whiten=True).fit(X_train)

print("done in %0.3fs" % (time() - t0))

eigenfaces = pca.components_.reshape((n_components, h, w))

print("Projecting the input data on the eigenfaces orthonormal basis")

t0 = time()

X_train_pca = pca.transform(X_train)

X_test_pca = pca.transform(X_test)

print("done in %0.3fs" % (time() - t0))

# #############################################################################

# Train a SVM classification model

print("Fitting the classifier to the training set")

t0 = time()

param_grid = {'C': [1e3, 5e3, 1e4, 5e4, 1e5],

'gamma': [0.0001, 0.0005, 0.001, 0.005, 0.01, 0.1], }

clf = GridSearchCV(

SVC(kernel='rbf', class_weight='balanced'), param_grid

)

clf = clf.fit(X_train_pca, y_train)

print("done in %0.3fs" % (time() - t0))

print("Best estimator found by grid search:")

print(clf.best_estimator_)

# #############################################################################

# Quantitative evaluation of the model quality on the test set

print("Predicting people's names on the test set")

t0 = time()

y_pred = clf.predict(X_test_pca)

print("done in %0.3fs" % (time() - t0))

print(classification_report(y_test, y_pred, target_names=target_names))

print(confusion_matrix(y_test, y_pred, labels=range(n_classes)))

# #############################################################################

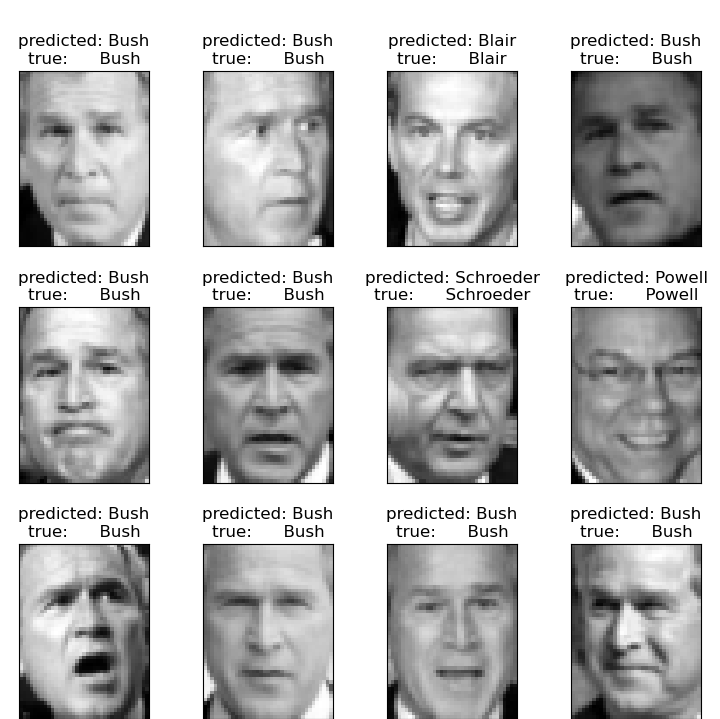

# Qualitative evaluation of the predictions using matplotlib

def plot_gallery(images, titles, h, w, n_row=3, n_col=4):

"""Helper function to plot a gallery of portraits"""

plt.figure(figsize=(1.8 * n_col, 2.4 * n_row))

plt.subplots_adjust(bottom=0, left=.01, right=.99, top=.90, hspace=.35)

for i in range(n_row * n_col):

plt.subplot(n_row, n_col, i + 1)

plt.imshow(images[i].reshape((h, w)), cmap=plt.cm.gray)

plt.title(titles[i], size=12)

plt.xticks(())

plt.yticks(())

# plot the result of the prediction on a portion of the test set

def title(y_pred, y_test, target_names, i):

pred_name = target_names[y_pred[i]].rsplit(' ', 1)[-1]

true_name = target_names[y_test[i]].rsplit(' ', 1)[-1]

return 'predicted: %s\ntrue: %s' % (pred_name, true_name)

prediction_titles = [title(y_pred, y_test, target_names, i)

for i in range(y_pred.shape[0])]

plot_gallery(X_test, prediction_titles, h, w)

# plot the gallery of the most significative eigenfaces

eigenface_titles = ["eigenface %d" % i for i in range(eigenfaces.shape[0])]

plot_gallery(eigenfaces, eigenface_titles, h, w)

plt.show()

Tiempo total de ejecución del script: (23 minutos 7.844 segundos)